31 January, 2008

My trustworthy MP3-player B-)

It's pretty cool working for an IT/ISP company, especially when you receive really cool Christmas gifts, like this Creative Stone 1GB MP3 player :P

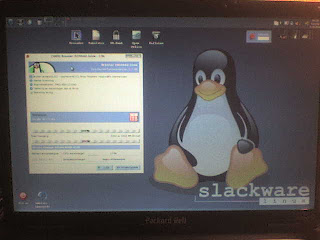

Slackware notebook

A mobile shot of my notebook running KDE 3.5.7 on my vanilla Slackware Linux 12.0 setup. This is my build machine, which I use to build software packages. Sometimes it also serves as my test machine for unconventional software, which usually makes it quite unstable compared to my stationary machine, which I use as a sound server, sound mixer and file server. And as you can see, I finally got my cell phone to send pictures directly to my blog. Man, I REALLY love technology ;D

Work

When you work at an ISP, slow night shifts can be a bitch. Not that I'm complaining ;) I love working nights ;P So I REALLY love Thai Red Bull 150 ml bottles; they keep my energy reserves at a maximum! These little red rascals really pack a massive punch!

Also, they're quite tasty ;P Yum-yum!

Invictus

Out of the night that covers me,

Black as the Pit from pole to pole,

I thank whatever gods may be

For my unconquerable soul.

In the fell clutch of circumstance

I have not winced nor cried aloud.

Under the bludgeonings of chance

My head is bloody, but unbowed.

Beyond this place of wrath and tears

Looms but the horror of the shade,

And yet the menace of the years

Finds, and shall find, me unafraid.

It matters not how strait the gate,

How charged with punishments the scroll,

I am the master of my fate:

I am the captain of my soul.

--William Henley (1849–1903)

UNIX

The title speaks for itself...

The Original Unix

http://en.wikipedia.org/wiki/Unix_philosophy

"Unix is simple. It just takes a genius to understand its simplicity."

– Dennis Ritchie

Dennis Ritchie invented the C programming language, which is often described as a "system implementation language". Together with Ken Thompson, Ritchie created the early versions of the original UNIX operating system at "Bell Labs", and with the aid of C they rewrote UNIX in a high-level language.

Bell Labs was part of the AT&T-owned Bell System. After the break-up of the Bell System in 1984, it became "AT&T Bell Laboratories". In 1996 AT&T spun off its technologies and organizations into "Lucent Technologies", but kept the services business within AT&T. Lucent still used the line "Bell Labs Innovations" in its logos. And in 2006, Alcatel and Lucent merged into the company "Alcatel-Lucent".

Ritchie now works in the "Convergence, Software and Computer Science Laboratory" department of Alcatel-Lucent, in the Bell Labs building.

That's just a brief little history of how much an IT company can reorganize and rebrand over a couple of decades. Still, Ritchie says it's been a good company and office to do his kind of work in, despite all the administrative stuff.

Sections of the above text are taken from Ritchie's homepage, which also contains a lot of interesting material on UNIX and UNIX programming: http://www.cs.bell-labs.com/who/dmr/

The last official commercial UNIX from AT&T/Bell Labs is known to most UNIX fanatics today as "System V". Its predecessors were the editions of the "UNIX Time-Sharing System" (1-7). Those editions also spawned the various "Berkeley Software Distribution(s)", whose descendants are available today (FreeBSD, OpenBSD, etc.). These are often referred to as "BSD Unix" because BSD shared some initial codebase with the original UNIX by AT&T/Bell Labs. "UNIX System V" continued up to Release 4 in 1988, while the research "UTSS" editions went up to the 10th Edition in 1989. In the 90's, "UNIX System V Release 4" formed the basis of both "Solaris" and the now commercially available "UnixWare". It is also the origin of the "SysV runlevel init scripts" found in many Linux distros, a lot of which heavily modify and customize the SysV init system. Slackware Linux itself uses BSD-style init scripts, with a compatibility layer for SysV-style scripts.

A number of big corporations made their own proprietary UNIX flavours: IBM made "AIX", SGI made "IRIX" and Hewlett-Packard made "HP-UX". I got some hands-on experience with some of these while working in the WAN sector.

Other Implementations

In 1987, the system called "MINIX" also saw the light of day. It was the source of inspiration for Linus Torvalds' "Linux", announced in 1991. Linux was built on UNIX philosophy and design concepts, but it is NOT based on, or forked in any way from, the UNIX codebase whatsoever. I cannot determine whether MINIX is a complete original or a fork.

The "Linux IS UNIX" claim is a common misconception, and probably the reason why so many UNIX codebase owners have tried to sue Linux contributors over the last decade. A report, reportedly funded in large part by Microsoft, was even written about the similarities between Linux and MINIX. It all but accused Linus of taking his idea and the basis for Linux entirely from MINIX, which turned out to be untrue (as even MINIX creator Andrew S. Tanenbaum noted on his MINIX website FAQ).

On the other hand, MINIX is microkernel-based and has absolutely nothing to do with the monolithic kernel design used in Linux. The only link is that Linus used and studied MINIX at the University of Helsinki while he was developing Linux. AND... MINIX started porting its source code to other architectures around 1991... coincidence?

If you're confused about the word "kernel", you should read this.

If you're still confused, you probably won't understand most of this post at all.

Tanenbaum and Torvalds even had a well-known 'flamefest' in the early 90's concerning the "obsoleteness" of the monolithic kernel design in Linux, compared to Tanenbaum's microkernel implementation in MINIX.

Lawsuits

The early 90's saw USL (Unix System Laboratories) bring a lawsuit against BSDi (Berkeley Software Design, Inc.). USL claimed that BSDi's system, which was derived from the Net/2 BSD release, contained illegally obtained, proprietary UNIX code.

From 2003 on, SCO, the company that claimed to own the rights to the original UNIX codebase, brought a flow of lawsuits against Linux contributors (primarily IBM and Novell). SCO claimed they had used its licensed and copyrighted UNIX code in their contributions. As a result of the court cases, a 2007 ruling solidified the copyrights under Novell, which therefore owns the codebase today. Novell also owns SUSE Linux, which was originally based on my favourite Linux distro, Slackware Linux ;P

Other systems based on original Unix

Another useful link in this context is the OS developed at Bell Labs, named "Plan 9". The project's goal was to recreate UNIX in the simplicity originally intended in its early development stages, but for use in the modern world. It also incorporated a couple of new concepts alongside the classic UNIX implementations. The first and second releases were not freely available. In 2000, in the "Lucent Technologies" era of Bell Labs, the third release was made under an open source license, and all commercial interest in the project dropped. The project is now in its fourth release. http://en.wikipedia.org/wiki/Plan_9_from_Bell_Labs

And last, but not least, Dennis Ritchie, with the help of others, created "Inferno", a related system built on the ideas of Plan 9. It aims to provide an environment based on original UNIX concepts on a wide spectrum of devices, architectures and platforms; it can run natively or hosted on WinNT/2000/XP, Irix, Linux, BSD and Solaris. Two things about this project are worth mentioning. First, the actual system changes themselves (filesystem, core). Second, its "Dis" virtual machine and the accompanying programming language "Limbo", whose compiled bytecode Dis can run with JIT compilation ("Just-In-Time", or "on-the-fly", compilation to native machine code). Inferno also seems to be the first system from Bell Labs to succeed with the dual-licensing model: both commercial ("without copyleft") and Free Software (FSF, GPL, "with copyleft"). http://en.wikipedia.org/wiki/Inferno_%28operating_system%29

30 January, 2008

My I/O

A personal reflection on the everyday use and securing of computers connected to the Internet.

Created: 09.12.2007

* Proprietary software (in Norwegian also called "godseid programvare") is a collective term for software that a commercial company keeps non-free. Software that is free of charge can also fall under this term; being free of charge does not necessarily make software free. This is where many misunderstandings arise, especially in English, where "free" means both "free of charge" and "free as in freedom".

IP spoofing:

In computer networking, the term IP spoofing describes packets created with a forged source address. The purpose is either to hide the sender's identity or to pose as a different computer system than the one actually sending the packet. Plain address spoofing is used when the attacker does not care about the recipient's responses, or manages to guess the responses the recipient sends to the forged address. In certain cases, the attacker can see these responses, or route them to their own machine. The most common case is when the attacker spoofs an address on the same LAN or WAN (i.e. the attacker sits on the network of the same Internet Service Provider (ISP)). IP spoofing is usually used when carrying out denial-of-service attacks against hosts.
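A minimal Python sketch can make the point concrete. It is an illustration added for this post and only builds bytes in memory; nothing is sent. In an IPv4 header, the source address is just four bytes at offset 12-15 that the sender fills in itself, and nothing in the header proves they are true. The function names are hypothetical, and the addresses come from the RFC 5737 documentation ranges.

```python
import socket
import struct


def ipv4_checksum(header: bytes) -> int:
    """RFC 791/1071 header checksum: one's-complement sum of 16-bit words."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(word for (word,) in struct.iter_unpack("!H", header))
    while total >> 16:                      # fold carries back into 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_ipv4_header(src: str, dst: str, payload_len: int = 0, proto: int = 17) -> bytes:
    """Build a 20-byte IPv4 header (no options). Nothing here checks that
    `src` actually belongs to this machine, which is the whole point."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        (4 << 4) | 5,                       # version 4, IHL = 5 words (20 bytes)
        0,                                  # type of service
        20 + payload_len,                   # total length
        0x1234,                             # identification (arbitrary)
        0,                                  # flags / fragment offset
        64,                                 # TTL
        proto,                              # 17 = UDP
        0,                                  # checksum placeholder
        socket.inet_aton(src),              # source address: written by the sender
        socket.inet_aton(dst),              # destination address
    )
    checksum = ipv4_checksum(header)
    return header[:10] + struct.pack("!H", checksum) + header[12:]


def source_address(header: bytes) -> str:
    """What a receiver sees as the sender: just bytes 12-15 of the header."""
    return socket.inet_ntoa(header[12:16])


if __name__ == "__main__":
    hdr = build_ipv4_header("198.51.100.7", "192.0.2.1")
    print(source_address(hdr))              # 198.51.100.7
```

The checksum only protects against transmission errors, not against lies. A header with a forged source still has a perfectly valid checksum.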

Port scanning:

A port scanner is software designed to probe a network for hosts with open communication ports. Administrators, analysts and hackers often use it to check the security of their networks, and crackers and criminals use it to compromise them. To port-scan a host is to scan the same host for several open communication ports. To "port-sweep" is to scan several hosts at once for one specific open port. The latter is usually used to find several hosts running the same exposed service, in order to abuse that service/port in a mass attack or denial-of-service attack (more on this below).
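The difference between a port scan and a port sweep can be sketched in a few lines of Python. This uses the simplest possible technique, a plain TCP "connect" scan with the standard `socket` module; real scanners use faster and stealthier methods. The function names are hypothetical.

```python
import socket
from typing import Iterable, List


def tcp_connect_scan(host: str, ports: Iterable[int], timeout: float = 0.5) -> List[int]:
    """Port scan: try a full TCP connection to each port on ONE host."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex() returns 0 on success and an error number on failure
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports


def port_sweep(hosts: Iterable[str], port: int, timeout: float = 0.5) -> List[str]:
    """Port sweep: check ONE port across many hosts."""
    return [host for host in hosts if tcp_connect_scan(host, [port], timeout)]


if __name__ == "__main__":
    # Only scan machines you are allowed to scan; here, your own loopback interface.
    print(tcp_connect_scan("127.0.0.1", [22, 80, 443]))
```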

Denial-of-service attacks

...and dozens of other hacking methods, before the packets are finally run through the chain to be forwarded to the internal LAN, and then on to the end hosts.

Denial of service (DoS) is a term used in information and IT security to describe an attack that prevents someone or something (e.g. a person or a system) from accessing information or resources they are supposed to have access to. A successful denial-of-service attack thus breaches the availability of the information/resource.

Denial-of-service attacks have grown into a problem on the Internet, especially the distributed variants (Distributed DoS, DDoS), where several "slave" machines (e.g. "zombies") are used to attack one or more machines over the network. Combined, all these machines have more bandwidth than the victim. The attacker exploits this to send the victim so much data that legitimate traffic cannot get through.

A denial-of-service attack does not necessarily have to be carried out over a network. It is possible to write programs that do nothing but copy themselves (so-called "fork bombs"). After a short while the processor becomes overloaded and the system grinds to a halt.
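The bandwidth argument is plain arithmetic. The sketch below uses made-up numbers: 1000 bots with 0.5 Mbit/s of upstream each, against a victim whose 100 Mbit/s link carries 10 Mbit/s of legitimate traffic. As a simplification, it assumes that a saturated link drops packets at random without favouring any traffic.

```python
def aggregate_bandwidth_mbit(bots: int, upstream_mbit_per_bot: float) -> float:
    """Total attack bandwidth if every bot floods at its full upstream rate."""
    return bots * upstream_mbit_per_bot


def legitimate_share(legit_mbit: float, attack_mbit: float, link_mbit: float) -> float:
    """Fraction of legitimate traffic that still gets through the victim's link.

    Simplifying assumption: a saturated link drops packets at random, so every
    packet (legitimate or not) has the same chance link/offered of surviving."""
    offered = legit_mbit + attack_mbit
    if offered <= link_mbit:
        return 1.0                      # link not saturated: nothing is dropped
    return link_mbit / offered


if __name__ == "__main__":
    attack = aggregate_bandwidth_mbit(bots=1000, upstream_mbit_per_bot=0.5)
    share = legitimate_share(legit_mbit=10.0, attack_mbit=attack, link_mbit=100.0)
    print(f"attack: {attack} Mbit/s, legitimate traffic delivered: {share:.1%}")
```

With those numbers the script prints `attack: 500.0 Mbit/s, legitimate traffic delivered: 19.6%`, i.e. roughly four out of five legitimate packets never arrive.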

** In computing, an "SPI" firewall (any firewall that performs stateful packet inspection, SPI) is a firewall that keeps track of the state of network connections passing through it (such as TCP streams, UDP communication or ICMP messages). The firewall is programmed to recognize legitimate packets for the different types of established connections. Only packets matching a known established state are allowed to pass through the firewall; all others are dropped.
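The "state table" idea can be sketched as a toy model in Python. It assumes (hypothetically) that everything leaving the LAN is allowed and creates a state entry. An incoming packet is accepted only if it is the reply direction of such an entry. Real SPI firewalls, such as Linux's netfilter/conntrack, also track TCP flags, timeouts and related connections, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Set, Tuple

# (protocol, source IP, source port, destination IP, destination port)
Flow = Tuple[str, str, int, str, int]


@dataclass(frozen=True)
class Packet:
    proto: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    outbound: bool          # True if the packet is leaving the protected LAN


class StatefulFilter:
    """Toy stateful packet inspection: remember outgoing flows, accept replies."""

    def __init__(self) -> None:
        self.established: Set[Flow] = set()

    def allow(self, pkt: Packet) -> bool:
        if pkt.outbound:
            # Assumption: all outgoing traffic is allowed and opens a state entry.
            self.established.add((pkt.proto, pkt.src_ip, pkt.src_port,
                                  pkt.dst_ip, pkt.dst_port))
            return True
        # An incoming packet must be the reverse direction of a known flow.
        reverse = (pkt.proto, pkt.dst_ip, pkt.dst_port, pkt.src_ip, pkt.src_port)
        return reverse in self.established


if __name__ == "__main__":
    fw = StatefulFilter()
    fw.allow(Packet("tcp", "10.0.0.5", 40000, "203.0.113.10", 80, outbound=True))
    print(fw.allow(Packet("tcp", "203.0.113.10", 80, "10.0.0.5", 40000, outbound=False)))  # True
    print(fw.allow(Packet("tcp", "203.0.113.66", 4444, "10.0.0.5", 22, outbound=False)))   # False
```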

*** If more than one firewall is used, all of them must be configured to allow/deny traffic between each other.

An alternative measure that may be wise is to tell the firewall(s) which programs should not be denied full access to the public network (the Internet). Another option is to open specific ports (which in theory is not regarded as particularly secure) and let the outermost firewall (if you run more than one) filter/drop unwanted traffic.

**** The last alternative is not recommended for people who find it hard to understand simple, general computer and network security.

This post is composed of my own experiences and excerpts from, among others, www.wikipedia.org

28 January, 2008

Slackware Linux ^_^

The Slackware Linux Project.

Written by: kimerik.olsen@gmail.com

Created: Wednesday, January 4th 2008

Last change: Friday, February 22nd 2008 - 02:xx GMT+1

What is Slackware Linux?

The Official Release of Slackware Linux is an advanced Linux operating system, designed with the twin goals of ease of use and stability as top priorities. Including the latest popular software while retaining a sense of tradition, providing simplicity and ease of use alongside flexibility and power, Slackware brings the best of all worlds to the table.

Originally developed by Linus Torvalds in 1991, the UNIX®-like Linux operating system now benefits from the contributions of millions of users and developers around the world. Slackware Linux provides new and experienced users alike with a fully-featured system, equipped to serve in any capacity from desktop workstation to machine-room server. Web, ftp, and email servers are ready to go out of the box, as are a wide selection of popular desktop environments. A full range of development tools, editors, and current libraries is included for users who wish to develop or compile additional software.

The Slackware Philosophy.

Since its first release in April of 1993, the Slackware Linux Project has aimed at producing the most "UNIX-like" Linux distribution out there. Slackware complies with the published Linux standards, such as the Linux File System Standard. We have always considered simplicity and stability paramount, and as a result Slackware has become one of the most popular, stable, and friendly distributions available.

Slackware Overview.

Slackware Linux is a complete 32-bit multitasking "UNIX-like" system. It's currently based around the 2.6 Linux kernel series and the GNU C Library version 2.5 (libc6). It contains an easy to use installation program, extensive online documentation, and a menu-driven package system. A full installation gives you the X Window System, C/C++ development environments, Perl, networking utilities, a mail server, a news server, a web server, an ftp server, the GNU Image Manipulation Program, Mozilla Firefox Web Browser, plus many more programs. Slackware Linux can run on 486 systems all the way up to the latest x86 machines (but uses -mcpu=i686 optimization for best performance on i686-class machines like the P3, P4, and Duron/Athlon).

Who, and why?

The Slackware Linux Project started as a hobby project (like many other Linux distributions) of Patrick J. Volkerding, a student at Minnesota State University Moorhead and the founder and maintainer of the Slackware Linux distribution. Volkerding earned a Bachelor of Science in Computer Science from Minnesota State University Moorhead in 1993. He has worked for many years, and continues to work, on this popular and extremely stable distribution. He is known to many as "The Man" and as Slackware's BDFL (the Slackware "Benevolent Dictator for Life"); without Patrick, there would be no Slackware. Patrick is a SubGenius affiliate/member.

The use of the word Slack in "Slackware" is a homage to J. R. "Bob" Dobbs: "..I'll admit that it was SubGenius inspired. In fact, back in the 2.0 through 3.0 days we used to print a dobbshead on each CD."

History.

Introduction, excerpts from "Early Linux Distribution History" & www.wikipedia.org.

(...)

The first Linux distribution was created by Owen Le Blanc at the Manchester Computing Centre (MCC) in the north west of England. The first MCC Interim release, as it was known, was released in February, 1992. Soon after the first release came other distributions such as TAMU, created by individuals at Texas A&M University, Martin Junius's MJ, and H. J. Lu's small base system.

This was followed shortly afterwards by the Softlanding Linux System (referred to as "SLS" for the rest of this essay), founded by Peter MacDonald. SLS was the first comprehensive distribution to contain elements such as X and TCP/IP. It was also the most popular of the original Linux distributions in the early 90's, when Linux was still distributed on 3½-inch floppy disks. SLS dominated the market until its developers decided to change the executable format from a.out to ELF. This was not a popular decision amongst SLS's user base at the time. (a.out is now considered obsolete.)


SLS was quickly superseded by the oldest distributions still surviving today: Slackware Linux, which was based on it, and Debian GNU/Linux.

(...)

Patrick Volkerding released a modified version of SLS, which he named Slackware. (The first Slackware release, version 1.00, came out on July 16th 1993.) It was supplied as 3½-inch floppy disk images, made available by anonymous FTP from Moorhead State University, and it quickly replaced SLS as the dominant Linux distribution at the time.

Slackware was the first Linux distribution to manufacture and sell Compact Discs containing the complete system as well as full source code for the included software. It was also the base for several other distros that came out afterwards (like SuSE Linux, whose early releases were based on Slackware).

In 1999, Slackware's release numbers saw a large increment from 4 to 7. This was explained by Patrick Volkerding as a marketing effort to show that Slackware was as up-to-date as other more popular Linux distributions, many of which had release numbers of 6 at the time (such as Red Hat releasing each revision of its distribution with an increment of 4.1 to 5.0 instead of 3.1 to 3.2 as Slackware did).

In 2005, the GNOME desktop environment was removed from the pending future release and turned over to community support and distribution. Some in the Linux community saw the removal of GNOME as significant, because the desktop environment is found in many Linux distributions. In response, several community-based projects began offering complete GNOME distributions for Slackware.

Design philosophy.

Many design choices in Slackware can be seen as examples of the KISS principle. In this context, "simple" refers to the viewpoint of system design, rather than ease of use. Most software in Slackware uses the configuration mechanisms supplied by the software's original authors; there are few distribution-specific mechanisms. This is the reason there are so few GUI tools to configure the system. This comes at the cost of user-friendliness. Critics consider the distribution time-consuming and difficult to learn. Advocates consider it flexible and transparent and like the experience gained from the learning process.

Package management.

Slackware's package management utilities can install, upgrade, and remove packages from local sources. However, they make no attempt to track or manage dependencies; they rely on the user to ensure that the system has all the supporting libraries and programs required by the new package. If any of these are missing, there may be no indication until one attempts to use the newly installed software, or to compile software from original source. Slackware packages are gzipped tarballs with filenames ending in .tgz.

The package contains the files that form part of the software being installed, as well as additional files for the benefit of the Slackware package management utilities. The files that form part of the software being installed are organized such that, when extracted into the root directory, their files are placed in their installed locations.
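To see what "organized such that, when extracted into the root directory, their files are placed in their installed locations" means in practice, here is a Python sketch using the standard `tarfile` module. The `hello` package is hypothetical. Real packages are built with Slackware's own `makepkg` tool; this sketch only mimics the layout.

```python
import io
import tarfile
import tempfile
from pathlib import Path
from typing import List


def add_file(tar: tarfile.TarFile, name: str, data: bytes, mode: int = 0o644) -> None:
    """Store an in-memory file in the tarball under a path relative to '/'."""
    info = tarfile.TarInfo(name)
    info.size = len(data)
    info.mode = mode
    tar.addfile(info, io.BytesIO(data))


def build_package(path: Path) -> None:
    """Write a toy package (hypothetical 'hello' program) as a gzipped tarball."""
    with tarfile.open(str(path), "w:gz") as tar:
        add_file(tar, "usr/bin/hello", b"#!/bin/sh\necho hello\n", 0o755)
        add_file(tar, "usr/doc/hello-1.0/README", b"A toy package.\n")
        # Metadata for the package tools, not installed like the other files.
        add_file(tar, "install/slack-desc", b"hello: hello (toy example package)\n")


def install_locations(pkg: Path) -> List[str]:
    """Where each payload file would land if the tarball were extracted in '/'."""
    with tarfile.open(str(pkg), "r:gz") as tar:
        return ["/" + member.name for member in tar.getmembers()
                if member.isfile() and not member.name.startswith("install/")]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        pkg = Path(tmp) / "hello-1.0-noarch-1.tgz"
        build_package(pkg)
        print(install_locations(pkg))
        # ['/usr/bin/hello', '/usr/doc/hello-1.0/README']
```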

The other files are those placed under the install/ directory inside the package. Two files are commonly found there: slack-desc and doinst.sh. More recently, a third file, slack-required, has appeared; third-party package-management tools use it to resolve dependencies. These files are not placed directly into the filesystem in the same manner as the other files in the package. The slack-desc file is a simple text file that contains a description of the package being installed; the package manager shows this description when you view packages. The doinst.sh file is a shell script intended to run commands or make changes that could not easily be made by changing the contents of the package (like creating symlinks and so forth). This script is run at the end of a package's installation.
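Every description line in a slack-desc file starts with the package name followed by a colon, which is how the tools tell description lines apart from comments. A hypothetical parser for that convention could look like the sketch below; the sample text and the function name are made up for illustration.

```python
from typing import List


def parse_slack_desc(text: str, package: str) -> List[str]:
    """Return the description lines that belong to `package`.

    Description lines start with "<package>:"; comments and any other
    lines are ignored."""
    prefix = package + ":"
    lines = []
    for raw in text.splitlines():
        if raw.startswith(prefix):
            lines.append(raw[len(prefix):].strip())
    return lines


SAMPLE = """\
# Comment lines like this one are ignored.
hello: hello (toy example package)
hello:
hello: A made-up package used to illustrate the slack-desc format.
"""

if __name__ == "__main__":
    desc = parse_slack_desc(SAMPLE, "hello")
    print(desc[0])      # hello (toy example package)
```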

Dependency resolution.

While Slackware itself does not incorporate tools to automatically resolve dependencies for the user by automatically downloading and installing them, some third-party software tools exist that can provide this function similar to the way APT does for Debian.

The third-party tool slapt-get does not provide dependency resolution for packages included within the Slackware distribution. It instead provides a framework for dependency resolution in Slackware compatible packages similar in fashion to the hand-tuned method APT utilizes. Several package sources and Slackware based distributions take advantage of this functionality.
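At its core, dependency resolution is an ordering problem: install every dependency before the package that needs it. The sketch below is a generic depth-first topological sort, not slapt-get's actual code. The package names and the `DEPS` table are hypothetical stand-ins for data a tool might gather from files such as slack-required.

```python
from typing import Dict, List, Set


def install_order(target: str, deps: Dict[str, List[str]]) -> List[str]:
    """Depth-first topological sort: every dependency comes before the
    package that needs it. Raises ValueError on a dependency cycle."""
    order: List[str] = []
    done: Set[str] = set()
    in_progress: Set[str] = set()

    def visit(pkg: str) -> None:
        if pkg in done:
            return
        if pkg in in_progress:
            raise ValueError(f"dependency cycle involving {pkg!r}")
        in_progress.add(pkg)
        for dep in deps.get(pkg, []):   # packages with no entry have no deps
            visit(dep)
        in_progress.discard(pkg)
        done.add(pkg)
        order.append(pkg)

    visit(target)
    return order


# Hypothetical dependency data, e.g. gathered from slack-required files.
DEPS = {
    "gimp": ["gtk+2", "libart_lgpl"],
    "gtk+2": ["glib2", "pango"],
    "pango": ["glib2"],
}

if __name__ == "__main__":
    print(install_order("gimp", DEPS))
    # ['glib2', 'pango', 'gtk+2', 'libart_lgpl', 'gimp']
```

A cycle raises `ValueError` instead of recursing forever, and packages already handled are skipped, so shared dependencies like `glib2` are installed only once.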

Editor's note:

I really like and personally favor slapt-get. Although I build and package my own packages from time to time, it is useful to have a semi-automated system tool for installing the libraries and applications needed to compile certain software packages. Otherwise I would have to locate and install all of them by hand. That can quickly become a slow and tedious effort when a software package depends on a plethora of non-standard libraries and applications, even when they can easily be found in third-party package repositories online.

Slackware 9.1 included Swaret and slackpkg as extra packages on its second CD, but did not install either by default. Swaret was removed from the distribution as of Slackware 10.0 but is still available as a 3rd party package. Alternatively, NetBSD's pkgsrc provides support for Slackware, among other UNIX-like operating systems. pkgsrc provides dependency resolution for both binary and source packages.

Hardware architectures.

Slackware is primarily developed for the x86 PC hardware architecture. However there have previously been official ports to the DEC Alpha and SPARC architectures. As of 2005, there is an official port to the System/390 architecture. There are also unofficial ports to the ARM, Alpha, SPARC, PowerPC and x86-64 (the slamd64 and Bluewhite64 distributions support the use of 64-bit computing) architectures.

Third-party software repositories.

Repositories of user-maintained, third-party Slackware packages are provided by linuxpackages.net and slacky.eu. (As of November 2007, slacky.eu requires you to register with its Italian community before you can download and install/upgrade packages from its repository.) These repositories include more recent versions of some software, as well as some software that is not released in any form by the Slackware maintainers. They are often used in conjunction with third-party package-management software, such as Swaret and slapt-get.

Use caution when downloading and installing third-party software from these repos! Some of the packages are newer versions of native Slackware packages. They can degrade your system's expected performance, or even give you a shocking experience the next time you reboot by rendering your system unusable.

Dropline GNOME, GSB (GNOME SlackBuild), GWARE and Gnome-Slacky are projects intended to offer Slackware packages for the GNOME desktop environment. These projects exist because Slackware no longer officially includes GNOME as of version 10.2, while a large number of users would prefer to have GNOME installed without the lengthy process of compiling it from source code. Another project for building GNOME is the SlackBot automated build script system.

From the Slackware-10.2 changelog:

+--------------------------+

Sat Mar 26 23:04:41 PST 2005

(...)gnome/*: Removed from -current, and turned over to community support and distribution. I'm not going to rehash all the reasons behind this, but it's been under consideration for more than four years. There are already good projects in place to provide Slackware GNOME for those who want it, and these are more complete than what Slackware has shipped in the past. So, if you're looking for GNOME for Slackware -current, I would recommend looking at these two projects for well-built packages that follow a policy of minimal interference with the base Slackware system:

http://gsb.sf.net

http://gware.sf.net

There is also Dropline, of course, which is quite popular. However, due to their policy of adding PAM and replacing large system packages (like the entire X11 system) with their own versions, I can't give quite the same sort of nod to Dropline. Nevertheless, it remains another choice, and it's _your_ system, so I will also mention their project:

http://www.dropline.net/gnome/

Please do not incorrectly interpret any of this as a slight against GNOME itself, which (although it does usually need to be fixed and polished beyond the way it ships from upstream more so than, say, KDE or XFce) is a decent desktop choice. So are a lot of others, but Slackware does not need to ship every choice. GNOME is and always has been a moving target (even the "stable" releases usually aren't quite ready yet) that really does demand a team to keep up on all the changes (many of which are not always well documented). I fully expect that this move will improve the quality of both Slackware itself, and the quality (and quantity) of the GNOME options available for it.

Folks, this is how open source is supposed to work. Enjoy. :-)

Editor's note:

Personally I prefer the native KDE environment included with Slackware, and always have. It has a wider user-community, more software packages available and it is based on Norwegian GUI-technology (Trolltech's Qt toolkit), which is pretty sweet since I'm Norwegian myself ;^)

As Patrick states, it's not a slight against GNOME at all. I just don't personally favour it as my desktop environment of choice. That's one of the wonderful things about open source: the freedom OF choice!

(Compared to commercially available closed source software which shall remain nameless)

Extra Slackware-related stuff.

Linux Journal interview with Patrick Volkerding

April 1st, 1994 by Phil Hughes

Linux Journal: When did you first start working with Linux?

Pat: I first heard about Linux in late 1992 from a friend named Wes at a party in Fargo, North Dakota. I didn't download it right away, but when I needed to find a LISP interpreter for a project at school, I remembered seeing people mention clisp ran on Linux. So, I ended up downloading one of the versions of Peter MacDonald's SLS distribution.

Linux Journal: Describe what Slackware is?

Pat: Well, I guess I can assume we all know what Linux is. :^) Slackware consists of a basic Linux system (the kernel, shared libraries, and basic utilities), and a number of optional software packages such as the GNU C and C++ compilers, networking and mail handling software, and the X window system.

Linux Journal: Why did you decide to do a distribution?

Pat: That's a good one. I never really did decide to do a distribution. What happened was that my AI professor wanted me to show him how to install Linux so that he could use it on his machine at home, and share it with some graduate students who were also doing a lot of work in LISP. So, we went into the PC lab and installed the SLS version of Linux.

Having dealt with Linux for a few weeks, I'd put together a pile of notes describing all the little things that needed to be fixed after the main installation was complete. After spending nearly as much time going through the list and reconfiguring whatever needed it as we had putting the software on the machine in the first place, my professor looked at me and said, “Is there some way we can fix the install disks so that new machines will have these fixes right away?”. That was the start of the project. I changed parts of the original SLS installation scripts, fixing some bugs and adding a feature that installed important packages like the shared libraries and the kernel image automatically.

I also edited the description files on the installation disks to make them more informative. Most importantly, I went through the software packages, fixing any problems I found. Most of the packages worked perfectly well, but some needed help. The mail, networking, and uucp software had a number of incorrect file permissions that prevented it from functioning out of the box. Some applications would coredump without any explanation — for those I'd go out looking for source code on the net. SLS only came with source code for a small amount of the distribution, but often there would be new versions out anyway, so I'd grab the source for those and port them over. When I started on the task, I think the Linux kernel was at around 0.98pl4 (someone else may remember that better than I do...), and I put together improved SLS releases for my professor through version 0.99pl9. By this time I'd gotten ahead of SLS on maybe half of the packages in the distribution, and had done some reconfiguration on most of the remaining half. I'd done some coding myself to fix long-standing problems like a finger bug that would say users had `Never logged in' whenever they weren't online. The difference between SLS and Slackware was starting to be more than just cosmetic.

In May, or maybe as late as June of `93, I'd brought my own distribution up to the 4.4.1 C libraries and Linux kernel 0.99pl11A. This brought significant improvements to the networking and really seemed to stabilize the system. My friends at MSU thought it was great and urged me to put it up for FTP. I thought for sure SLS would be putting out a new version that included these things soon enough, so I held off for a few weeks. During this time I saw a lot of people asking on the net when there would be a release that included some of these new things, so I made a post entitled “Anyone want an SLS-like 0.99pl11A system?” I got a tremendous response to the post.

After talking with the local sysadmin at MSU, I got permission to open an anonymous FTP server on one of the machines - an old 3b2. I made an announcement and watched with horror as multitudes of FTP connections crashed the 3b2 over, and over, and over. Those who did get copies of the 1.00 Slackware release did say some nice things about it on the net. My archive space problems didn't last long, either. Some people associated with Walnut Creek CDROM (and ironically enough, members of the 386BSD core group) offered me the current archive space on ftp.cdrom.com.

Linux Journal: Why did you call it Slackware?

Pat: My friend J.R. “Bob” Dobbs suggested it. ;^) Although I've seen people say that it carries negative connotations, I've grown to like the name. It's what I started calling it back when it was really just a hacked version of SLS and I had no intention of putting it up for public retrieval. When I finally did put it up for FTP, I kept the name. I think I named it “Slackware” because I didn't want people to take it all that seriously at first.

It's a big responsibility setting up software for possibly thousands of people to use (and find bugs in). Besides, I think it sounds better than “Microsoft”, don't you?

Linux Journal: Some of the people out here in Seattle call them MicroSquish. :-) I admit that I initially avoided going from SLS to Slackware because I didn't take the name seriously. But the feedback I heard on the Internet pointed out why I should take it seriously. What did you expect to happen with the distribution?

Pat: I never planned for it to last as long as it has. I thought Peter MacDonald (of SLS) would take a look at what I was doing and would fix the problems with SLS. Instead, he claimed distribution rights on the Slackware install scripts since they were derived from ones included in SLS. I was allowed to keep what I had up for FTP, but told Peter I wouldn't make other changes to Slackware until I'd written new installation scripts to replace the ones that came from SLS. I wrote the new scripts, and after putting that much work into things I wasn't going to give up. I did everything I could to make Slackware the distribution of choice, integrating new software and upgrades into the release as fast as they came out. It's a lot of work, and sometimes I wonder how long I can go on for.

References.

This essay/article is composed of material from various online sources: www.slackware.com, www.linuxjournal.com, www.wikipedia.org, www.slackwiki.org and http://www.linux-knowledge-portal.org/. It is also a tribute that reflects some of my (the editor's) personal views, opinions and notions about this wonderful and versatile Linux distribution.